In this year’s survey, one of the most significant takeaways is the sheer volume of data that researchers are handling. What else did we learn? Read on for the highlights!

Who did we survey?

The majority of respondents came from North America, with about a quarter of respondents residing in the UK and EU. The two largest groups of people conducting systematic reviews are those in the health economics and outcomes research (HEOR) field at 62%, and those doing reviews for policy or guideline development at 40%.

Some 64% of respondents estimate that they conducted 1-10 literature reviews in the past 12 months, while 23% say they conducted between 11 and 50.

Among the most exciting findings from the 2019 Survey on Literature Reviews was how these individuals deal with references and data.

Here’s a look at how researchers are finding and processing data and the challenges of systematic reviews in 2019.

Where are they finding references?

PubMed is, hands down, the most popular source for references. A staggering 97% of respondents indicated that they used PubMed to find potentially relevant citations. After PubMed, Embase (72%) and the Cochrane Library (71%) were the second and third most-used sources.

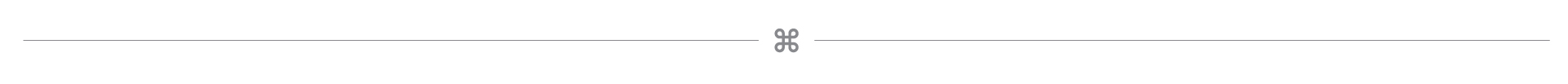

What kind of volume are we dealing with?

As you can likely imagine, screening that many references takes a lot of time.

After screening, the largest share of respondents (40%) say they have between 25 and 50 references left over. Another 28% say they have between 51 and 100 references left over, and 16% say they have fewer than 25.

It’s no surprise, then, that 29% of people conducting systematic reviews say that a typical review takes about 1-3 months to complete, and 26% say it takes 3-6 months.

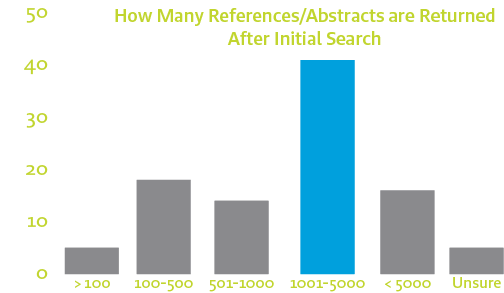

What are the biggest challenges?

For reviewers in 2019, there are a few clear trends when it comes to facing challenges with their systematic reviews. From our survey, the top three challenges of systematic reviews are:

This tells us that reviewers in 2019 may be finding it harder to procure useful data. They are spending more time searching databases and screening, and they are struggling to find material that makes it past the screening phase.

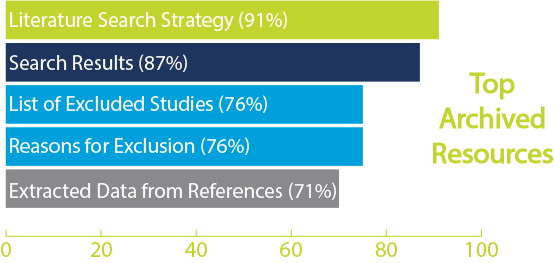

To save or not to save

Archiving data is critical for reviewers, but how do they decide what to archive and save versus what to purge?

The most common resources that get archived are:

How systematic review tools can help

From our 2017 survey, we learned that reviewers felt challenged by the amount of data they needed to weed through. They used a variety of systematic review tools, including spreadsheets, systematic review software, and internal data repositories, to sort and store data. Most participants surveyed were methodical in their search strategy, but some felt they needed more guidance in proper methodology to ensure consistency and accuracy in their reviews. A majority of those surveyed said that finding time and resources was a significant challenge when conducting systematic reviews.

In 2019, we see more of the same, but many challenges are amplified by the fact that the amount of available data is growing steadily. Reviewers are looking for ways to make their processes faster, more efficient, and more thorough. They also need solutions for storing large amounts of data. DistillerSR checks all of these boxes for individuals who need to perform comprehensive systematic reviews.

If you’re still using Excel (like 71% of those surveyed in 2019), you will be happy to know there is a better way. Read our blog about why spreadsheets are no longer a good option for reviewers.

DistillerSR streamlines systematic reviews by optimizing the screening process with templated forms and providing smart, AI-driven features like deduplication and reference scoring. There are also a ton of exciting new features coming soon that you can look forward to, all designed to make your systematic reviews more efficient and accurate.